Random Assignment/Quota Balancing

Random Assignment creates a level playing field across all the items being tested, so every item is evaluated by a comparable sample and the analysis and insights are consistent. Any difference in results can then be attributed to the stimulus itself, rather than to different audience mixes having seen different concepts or packs. This makes the results reliable and accurate. The feature is designed to deliver balanced results and is applied automatically to our concept, pack, and comms testing automated solutions. For a custom survey, you can apply it by ticking a box on the Define page. It is only available when targeting the Toluna panel sample, not your own sample.

The technique assigns respondents at random so that each has an equal chance of being placed in any group. As a result, your target audience is evenly represented across each concept, pack, or communication being tested. An algorithm works behind the scenes to achieve this as soon as your survey is launched. The quota balancing takes into account the combination of demographics, profiling, and any custom targets you create, provided you nest (interlock) the quotas when you set them up. It works with both monadic designs (each respondent sees only one concept) and sequential monadic designs (each respondent rates several concepts in the same survey).
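The post does not describe the algorithm itself, so the following is only a minimal sketch of one common way to do quota-balanced random assignment, not Toluna's implementation. It assumes each respondent belongs to an interlocked quota cell (for example gender × health consciousness) and is assigned to whichever concept currently has the fewest respondents in that cell, with ties broken at random. The class and method names are hypothetical, and the sketch ignores dropouts and quota closure.

```python
import random
from collections import defaultdict


class QuotaBalancedAssigner:
    """Hypothetical sketch of monadic quota-balanced random assignment.

    Each respondent goes to the concept with the fewest respondents so far
    in that respondent's interlocked quota cell; ties are broken at random.
    Not Toluna's actual algorithm.
    """

    def __init__(self, concepts, seed=None):
        self.concepts = list(concepts)
        self.rng = random.Random(seed)
        # counts[cell][concept] = respondents assigned so far in that cell
        self.counts = defaultdict(lambda: {c: 0 for c in self.concepts})

    def assign(self, cell):
        cell_counts = self.counts[cell]
        fewest = min(cell_counts.values())
        # Only the least-filled concepts in this cell are eligible.
        candidates = [c for c in self.concepts if cell_counts[c] == fewest]
        choice = self.rng.choice(candidates)  # random tie-break
        cell_counts[choice] += 1
        return choice


if __name__ == "__main__":
    assigner = QuotaBalancedAssigner(
        ["Concept 1", "Concept 2", "Concept 3", "Concept 4"], seed=42
    )
    arrivals = (
        [("female", "extremely/very")] * 160
        + [("female", "somewhat")] * 320
        + [("female", "not very")] * 120
    )
    random.Random(7).shuffle(arrivals)  # respondents arrive in no set order
    for cell in arrivals:
        assigner.assign(cell)
    for cell, per_concept in assigner.counts.items():
        print(cell, per_concept)  # 40, 80 or 30 per concept in each cell
```

Random tie-breaking means no concept is systematically favored, while the least-filled rule keeps each cell's counts within one respondent of each other across concepts, however the respondents happen to arrive.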

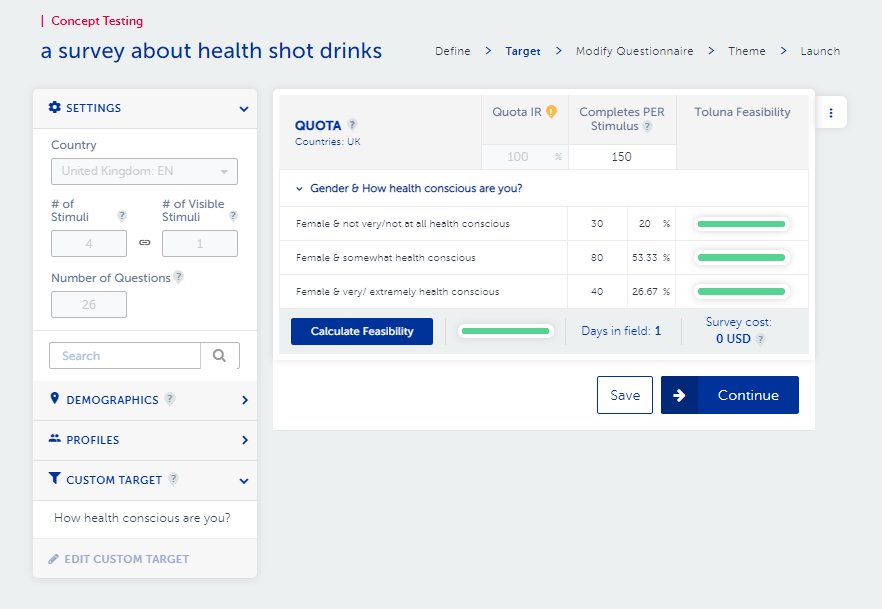

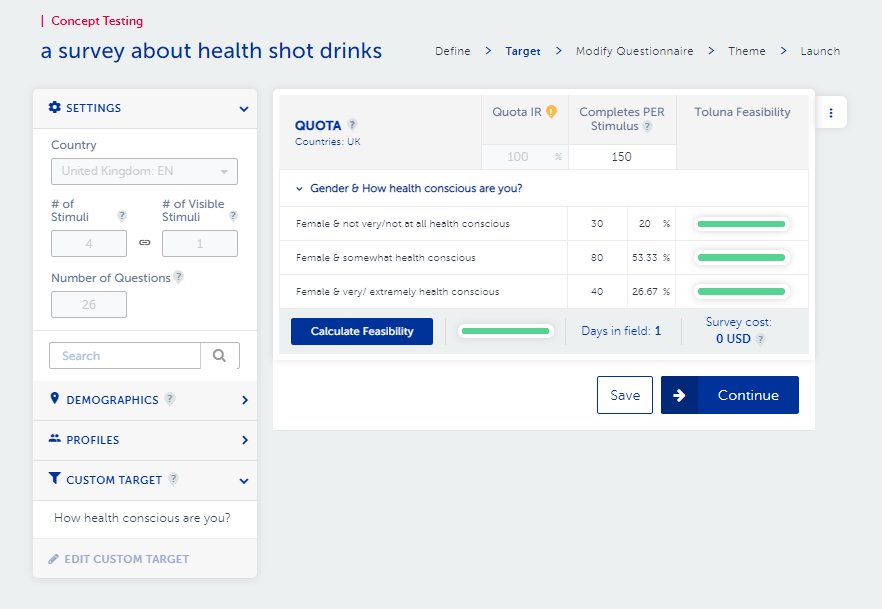

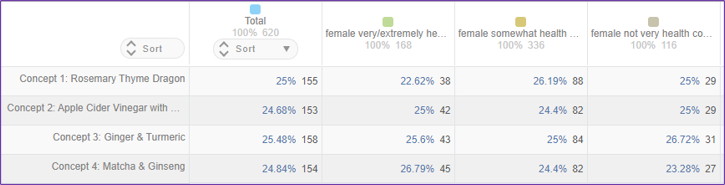

Here is an example. We created a simple target of females with different levels of health consciousness by interlocking a key demographic with a custom target. We set a total of 150 completed interviews per concept and allocated a percentage quota of those interviews to each of the three target groups. You can choose whatever combination and distribution your survey needs. Four different ‘health shot drink’ concepts were tested using a monadic approach.
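To make the arithmetic of this example concrete, here is a small sketch that builds the full quota grid: one target per concept and interlocked cell. It uses the per-concept targets reported later in this post (40, 80 and 30 completes out of 150). The concept labels and the `build_quota_grid` helper are hypothetical and only illustrate the calculation.

```python
# Per-concept targets for each interlocked cell (female x health consciousness),
# as reported in this post's example.
CELL_TARGETS = {
    "extremely/very health-conscious": 40,
    "somewhat health-conscious": 80,
    "not very health-conscious": 30,
}
# Hypothetical labels for the four health shot drink concepts.
CONCEPTS = ["Concept 1", "Concept 2", "Concept 3", "Concept 4"]


def build_quota_grid(cell_targets, concepts):
    """Return {(concept, cell): target completes}.

    Every concept receives the same per-cell targets, so each concept is
    evaluated by the same audience mix.
    """
    return {
        (concept, cell): n
        for concept in concepts
        for cell, n in cell_targets.items()
    }


if __name__ == "__main__":
    per_concept = sum(CELL_TARGETS.values())  # 150
    grid = build_quota_grid(CELL_TARGETS, CONCEPTS)
    for cell, n in CELL_TARGETS.items():
        share = n / per_concept
        print(f"{cell}: {n} per concept ({share:.1%}), {n * len(CONCEPTS)} overall")
    print(f"Total completes: {sum(grid.values())}")  # 600
```

Expressed as percentages of each concept's 150 completes, these targets are roughly 26.7%, 53.3% and 20%, and across four concepts the study needs 600 completes in total.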

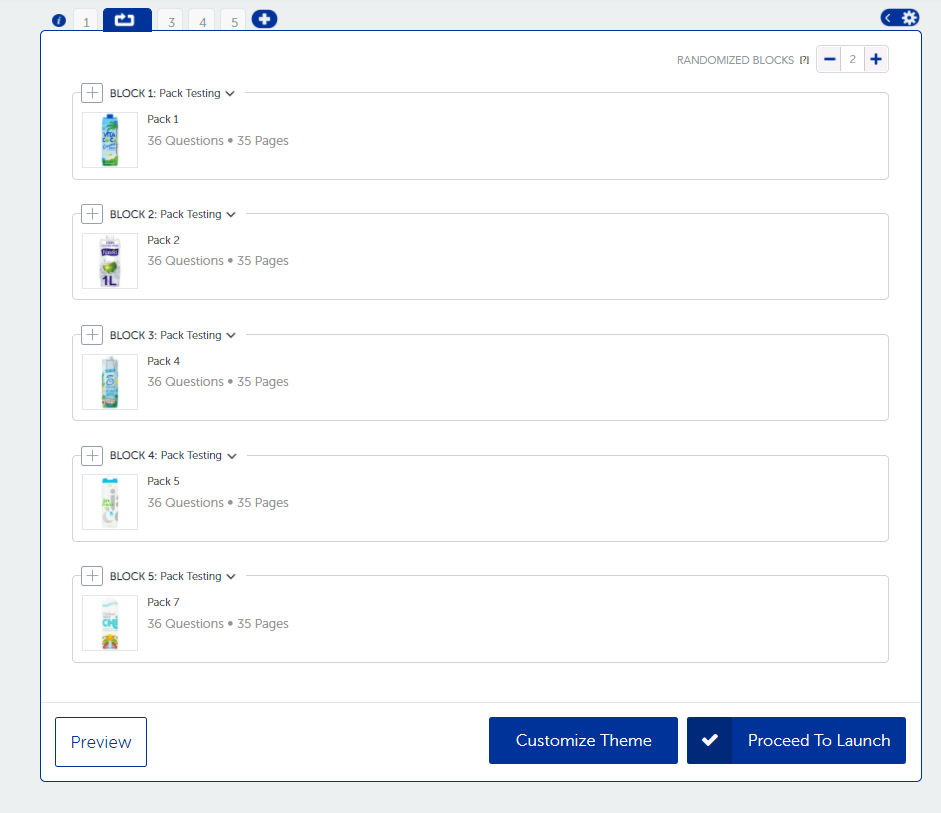

If you are adding Random Assignment to a custom survey, you need to add the Random Assignment page to the questionnaire build. To do this, select the Random Assignment widget from the questions and elements listed on the left-hand side of the build screen. The Random Assignment widget looks like this:

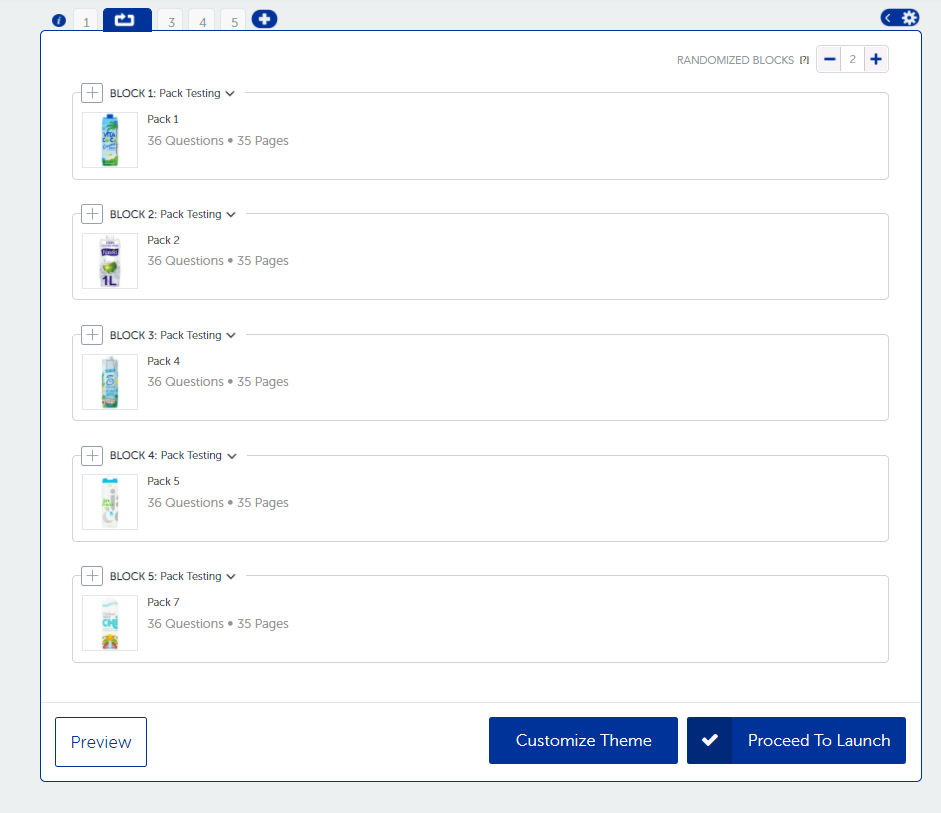

Only one Random Assignment module, with up to 49 blocks (one block per stimulus), can be added to a survey. Once it has been added, you cannot add the widget again.

Add one block for each stimulus to be tested. You can ask the same set of questions for every stimulus, some of the same questions, or different questions for each stimulus. If you want to compare products, we recommend using the same questions and wording in every block.

You can select how many randomized blocks each respondent sees, which supports both monadic and sequential monadic designs. In our automated solutions, this setting is captured from the wizard. For custom surveys, set it in the top right corner of the Random Assignment module (pictured below).
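The post says only that you choose how many randomized blocks each respondent sees (one for monadic, several for sequential monadic) within a module of up to 49 blocks. As a hedged sketch, not the platform's actual logic, one way to pick blocks is to choose those shown least often so far (breaking ties at random) and then shuffle their presentation order. `BlockSelector` and its parameters are hypothetical.

```python
import random

MAX_BLOCKS = 49  # per-module limit stated in this post


class BlockSelector:
    """Hypothetical sketch: choose which blocks a respondent sees.

    Picks the `blocks_per_respondent` least-shown blocks (random tie-break)
    and presents them in random order. Not the platform's actual logic.
    """

    def __init__(self, blocks, blocks_per_respondent, seed=None):
        if not 1 <= len(blocks) <= MAX_BLOCKS:
            raise ValueError(f"a module holds 1 to {MAX_BLOCKS} blocks")
        if not 1 <= blocks_per_respondent <= len(blocks):
            raise ValueError("blocks_per_respondent must be between 1 and the number of blocks")
        self.blocks = list(blocks)
        self.k = blocks_per_respondent
        self.rng = random.Random(seed)
        self.exposures = {b: 0 for b in self.blocks}

    def select(self):
        # Sort by exposure count; the random second key breaks ties.
        ranked = sorted(self.blocks, key=lambda b: (self.exposures[b], self.rng.random()))
        chosen = ranked[: self.k]
        for b in chosen:
            self.exposures[b] += 1
        self.rng.shuffle(chosen)  # randomize presentation order
        return chosen


if __name__ == "__main__":
    monadic = BlockSelector(["A", "B", "C", "D"], blocks_per_respondent=1, seed=1)
    sequential = BlockSelector(["A", "B", "C", "D"], blocks_per_respondent=2, seed=1)
    print(monadic.select(), sequential.select())
```

Setting `blocks_per_respondent=1` gives a monadic design and any larger value gives sequential monadic. The shuffle varies presentation order, but this sketch does not strictly balance how often each block is shown first.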

In our automated solutions, the Random Assignment block is added for you, and the questions within it are based on the metrics selected in the wizard. You can still add, modify, or remove questions once you reach the build stage of the solution.

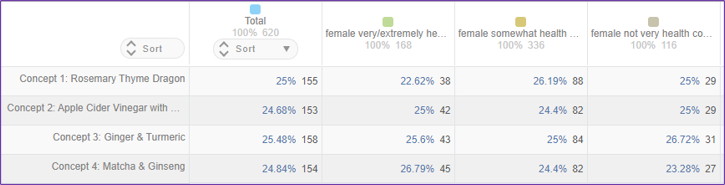

In our real-time analytics tool, you can add the sub-populations you targeted in the survey as a cross-break when analyzing results. The targets we set out to achieve are shown in the quota image earlier in this post: 150 completed interviews per concept overall, split by sub-population into 40 completes for females who are extremely/very health-conscious, 80 for those who are somewhat health-conscious, and 30 for those who are not very health-conscious. Here we show the distribution actually achieved by concept against those targets and quotas, and it clearly shows the balancing that has taken place.
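If you want to run the same check outside the analytics tool, a small sketch like the following compares each concept's achieved completes per sub-population with the per-concept targets (40, 80 and 30 in this example). The function names, the input shape, and the `tolerance` parameter are assumptions for illustration; the post does not describe an export format.

```python
def cell_shares(counts):
    """Convert one concept's {cell: completes} into {cell: share of that concept's total}."""
    total = sum(counts.values())
    return {cell: n / total for cell, n in counts.items()} if total else {}


def check_balance(achieved, targets, tolerance=0.0):
    """Flag cells where a concept's achieved completes miss the per-concept target.

    achieved:  {concept: {cell: completes}}, e.g. read off the cross-break
    targets:   {cell: target completes per concept}
    tolerance: allowed deviation as a fraction of the target (0.05 = 5%)
    Returns a list of (concept, cell, achieved, target) tuples; empty means balanced.
    """
    issues = []
    for concept, counts in achieved.items():
        for cell, target in targets.items():
            got = counts.get(cell, 0)
            if abs(got - target) > tolerance * target:
                issues.append((concept, cell, got, target))
    return issues
```

An empty result means every concept reached the same audience mix, which is the balance the cross-break is meant to confirm; `cell_shares` gives the same comparison as percentages.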

This means that any differences in results across target sub-populations and concepts can be confidently attributed to genuine differences in response, rather than to bias introduced by differences in sampling. This matters because decisions need to rest on high-quality surveys and results that can be relied on. We believe this is a key point of difference from competing platforms.